Artist Interview: Bill Evans

It’s an open secret that vocals are routinely edited during post-production, where recordings are corrected if the singer gets a note wrong. The term “autotune” entered the musical vocabulary after the effect appeared on Cher’s 1998 hit single Believe; it was deliberately exaggerated in the song, and received lots of publicity thanks to the single’s wide commercial success. Instrumentals can also be corrected by re-recording and editing if there are any kinks during studio recording.

This desire for perfection has a lot to do with the audience’s aesthetic expectations.

But it raises the question: How authentic is a musical performance if any so-called “error” in a melody or chord is retouched? To what extent does the “magic of the moment” get lost?

This is what preoccupies Bill Evans. He has made a name for himself by editing technical errors made by virtuoso musicians in live performances, on stage or in a studio, without sacrificing authenticity in the process. His portfolio includes legends like Steve Lukather (Toto), Steve Morse (Deep Purple), Steve Vai (Frank Zappa) and Mike Portnoy (Dream Theater).

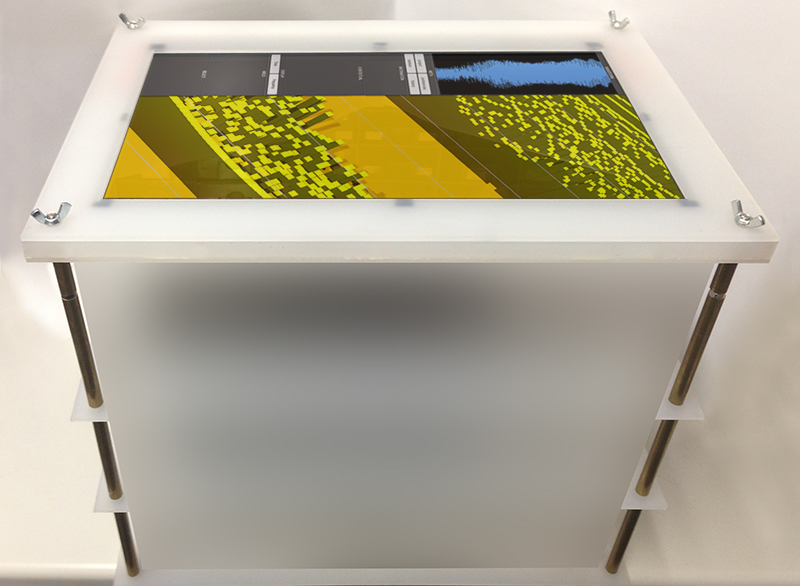

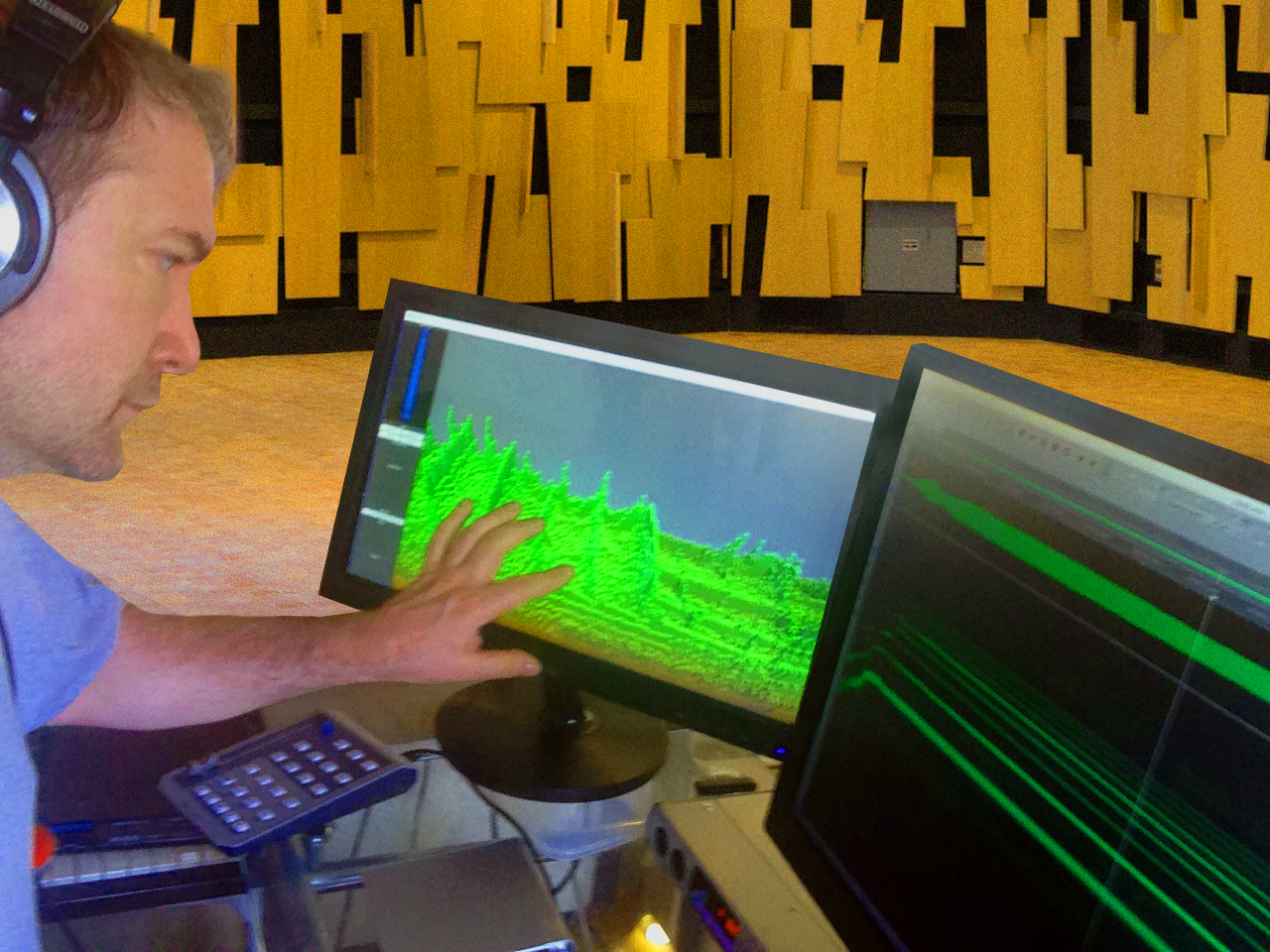

His PhD thesis at Manchester Metropolitan University is the result of intense research and experience in the specialized area of post-production. He calls his method “Harmonic Phrase Analysis and Restoration”, or HPAR. His tools include the latest technologies, some of them self-developed, that are truly futuristic. In addition to SpectraLayers Pro, he also uses Belexes, a Virtual Audio Workstation (VAW) of his own making. It can be operated using eye and head tracking, as well as via a Volumetric Haptic Display (VHD). The display projects a three-dimensional haptic sensory field into space. By manipulating the field with his hands, Evans is able to shape sound like clay.

In his interview, Bill Evans shares key insights into his work with next-generation music technologies and his HPAR method, and explains how SpectraLayers revolutionized audio editing.

Bill Evans – “Words like ‘mistake’ and ‘fix’ are really loaded terms”

One of your PhD theses was about the development of a technological and musical approach named Harmonic Phrase Analysis and Restoration (HPAR), which creates additional clarity in multitrack digital recordings, and resolves performance errors (especially in live performances) without a loss of authenticity. Can you briefly explain how the HPAR principle works?

My approach begins with a reexamination of what a mistake is in a recording—and what it would therefore mean to fix it. Words like “mistake” and “fix” are really loaded terms—whatever meaning we imbue them with has lasting effects far beyond whatever process is used to deal with them in the studio. The music industry defines a mistake as an instance where the listener consciously perceives that the performer’s output was incorrect; and therefore, can be fixed. But is that what music is really about?

If capturing great performances is our goal, then couldn’t we also define an error as when the sound produced by performers didn’t match their intention? The response shouldn’t be, “Did anyone notice that I screwed up?” A more musical one is, “That is not what I had in my head—that’s not what I meant to say—and not how it was supposed to sound.”

Once you define performances in these terms, you can change your goal from hiding mistakes, to restoring the originally intended performance. That’s the goal of my technique—to predict what the performer meant to play, and how it would sound. The process is a bit like archeology mixed with forensic science and old-fashioned detective work.

The process isn’t perfect, and requires continual refinement along the way. It’s informed by multiple areas such as music theory, cognitive science, psychoacoustics, signal processing, and familiarity with the performer’s repertoire. It draws from the large body of scientific knowledge regarding how humans cognitively and physically plan the musical and physical aspects of performances. When this process goes awry, clues are left as to the original intention. Combined with an examination of how listeners experience sound and music, and the common aspects of a performance (e.g. phrasing), a picture can begin to emerge of the intended performance.

What is “Belexes”, the virtual audio workstation (VAW) that you developed as part of your other thesis? Can you explain the basic idea behind the hardware/software to us?

Belexes is a new type of hardware/software audio workstation, designed to support a new paradigm for audio editing: direct, manual manipulation of sound. Current audio editing software presents us with many different ways to visualize sound, and a set of computer interfaces to go with them. Belexes asks the question, “What if instead of going through a computer program, you could manipulate sound with your hands, and shape it like clay?”

Belexes answers the question with a very different way of looking at and working with audio. It’s the world’s first 3D audio editing system, which was required for audio to be interacted with as a 3D object. All of the operations are performed using 3D multitouch actions; some are on the screen like a traditional touchscreen, and some are performed in mid-air. To help simulate haptics, I created the first voxel-addressable force-field projection system: the Volumetric Haptic Display. In combination with eye and head tracking, the engineer experiences audio as a fully responsive 3D physical object, but without the requirements of any external encumbrance such as VR glasses, gloves, or markers.

The name “Belexes” comes from a Kansas song written by Kerry Livgren. Kerry comes up with great names. This one refers to the title character of the song, which describes Belexes’ adventures in…well, I’m not exactly sure…I do know Belexes is a character…I remember Kerry telling me that. I also remember him then telling me to please stop asking him about Kansas songs. To this day, many fans (myself included) believe the song to be an allegory for the secretive techniques employed by badgers to genetically engineer cabbage within the context of a post-agrarian society. [Note: Kerry just reminded me it was actually carrots.]

Do you consider your work as an art form? Or is it purely technical optimization? What kind of creative work do you do outside of the studio?

A bit of both…like musical performance itself. Sometimes I wish it was more purely technical, but I can best describe the process as acting like an engineer and thinking like a musician.

There’s an “outside”?

SpectraLayers Pro – “SpectraLayers is one of those applications that blurs the line between technology and magic”.

Why did you choose SpectraLayers Pro 4 and what makes this program so special in your opinion?

I feel like SpectraLayers almost chose me! There’s nothing else that even attempts to do what it does! It’s like Photoshop for audio. The work Tom and I do with Harmonic Phrase Analysis and Restoration would be impossible without SpectraLayers, just as most digital photo editing wouldn’t be possible without a program like Photoshop.

I don’t mean to imply you need to be doing audio science to use SpectraLayers, or to benefit from it. As with Photoshop, there’s a ton of ways to use it, at every level of expertise, and with a wide range of applications.

Currently, most audio editing is done with waveforms—and that was revolutionary when it became available in the 1970s. What Robin Lobel did in inventing SpectraLayers was to create the next revolution in how audio can be visualized and edited. He didn’t invent spectrograms—he made them more useful. And because he based the program on a skill set many of us already have using Photoshop, the tools all instantly make sense.

SpectraLayers is one of those applications that blurs the line between technology and magic. And competitively, it allows me to do things other engineers can’t do—especially when working with virtuoso artists, where every detail is critical.

Which tools and features do you use the most in SpectraLayers Pro 4?

I really like to get inside the audio to uncover the music that may be hiding. The first thing SL does is reveal where the potential is to make sound more clear and organic…to see where the recording process may have obscured things, and to identify the specific parts that evoke emotion.

Sometimes, this means using the harmonic tools to isolate specific harmonics, or using the harmonic series tool to examine an entire series. Using SpectraLayers’ multiple-track features, I can selectively remove sections of audio, and audition what remains, or just the selection that was removed.

The eraser and dodge tools are remarkable, especially because (like in Photoshop) you can set the edge sharpness and opacity. Just with those two tools, you can do incredible things. They allow me to selectively bring out, or obscure, very precise elements of sound, and as a result, music. The clone tool is fantastic for copying parts of sounds. Often, I’ll hear something in part of a sound that I’ll want to apply to another, and the clone tool makes this possible.

There’s also a feature in the next version of SpectraLayers that I’m not supposed to talk about…all I can say is it revolutionizes what can be seen with a spectrogram. In order to fix something, you have to be able to see it first!

You often share your thoughts with the developer of SpectraLayers Pro, Robin Lobel. What do you mainly talk with him about? Or are you just talking shop?

Oddly enough, we talk about donuts a lot! It’s a subject that people just don’t seem to focus on any more. Which is a shame. They’re delicious.

Speaking with the creator of a favourite tool is like talking to one of your favourite musical artists. When we do talk shop, Robin helps me understand some of his deeper thinking in the program, which I can then use to inform new techniques and processes. From the other side, I’ll share these techniques with him, which will, in turn, provide inspiration for the evolution of SpectraLayers’ feature set.

I also like to ask him support questions because I actively avoid manuals. (He always answers by emailing the manual to me.)

Performance-Restoration – “All the current ways to ‘fix mistakes’ remove what makes a performance great in the first place.”

In general, mixing and mastering are better known than audio/performance restoration or error analysis. How would you describe to someone who’s not in this field what exactly you do and why your knowledge is essential for the listener’s experience?

At its core, I believe great music is about great performances. So before mixing and mastering even enter the equation, you need those performances on the recording. But modern cultural and industrial expectations of technical perfection have pushed musicians away from these performances—and toward ones that are safer, less expressive, and unemotional—in a word, less authentic.

Whether at a Demi Lovato concert, or listening to a Periphery album, audiences expect “perfect” performances. A flatly sung phrase, forgotten lyric, accidental guitar pickup hit, or flubbed guitar note—these are the signs of an amateur. But for many reasons—even the most prepared and practiced musicians are not immune from these occurrences—especially when recording a live performance.

The problem is that all the current ways to “fix” these “mistakes” remove what makes a performance great in the first place: the continuous, unedited, linear flow of expression that emerges only from a specific time, place and mindset.

The most common way of dealing with “mistakes” is to re-record the problematic section. But you can’t go back in time—you can’t recreate the moment—so you can’t recreate what that performance would have been. It doesn’t matter if you’re Beyoncé or Buckethead. This isn’t to criticize artists for doing this—it’s a necessity, not a choice.

The other common method is for the recording engineer to “fix” it. The goal of this approach is to obscure the error, hiding it by preventing it from entering the listener’s conscious mind. It is even less authentic-sounding than re-recording because the new musical sections were never performed by the artist, and therefore do not represent that artist’s intended expression. As with musicians, I’m not criticizing engineers for this practice; one way or another, anything that audiences can interpret as errors must be dealt with.

But there is a price. Artists are forced to take fewer chances, and as a direct result, be less expressive. Even so, “mistakes” do (inevitably) occur—even artists like Aretha Franklin and Steve Morse make them regularly. This results in the final “performance” being watered down even more, through re-recording and audio editing. Before mixing and mastering can occur, the questions emerge, “What does this recording even mean, and what is its value?”

I believe artists should be able to express themselves without fear of being judged for their errors. My aim is to help make that possible.

Which artists and musicians trust your expertise regarding performance restoration?

Sure. I mostly work with “virtuoso” artists (especially guitarists), where clarity is essential for the listener to perceive all the details of their extraordinary performances.

I’ll rarely just do performance restoration on a project. My process (Harmonic Phrase Analysis and Restoration) doesn’t only restore performances, but also enhances the sonic quality and audible character of the performance. My original goal as a producer and engineer was to create recordings with exceptional clarity, especially live recordings. Along the way, one of the surprising things I discovered in my research was that the technological tools required for solving this problem also applied to the restoration of performances.

To do both, recordings must be broken down to separate musical and sound-based components. And from there, down to their atomic building blocks. Once you’ve done that, you can reconstitute audio in new and innovative ways, based on how we perceive sound and music.

When I’m asked to work with an artist, it’s usually about bringing out the performance, removing whatever impediments there may be for the listener, and empowering the mix engineer with the enhanced clarity and tone. All of it falls under the rubric of restoration, because the performance has already occurred.

This can apply to a live recording, where there is a lot of excess noise…or a studio recording where the recording process was secondary to the musical one. (You might have a great performance, but part-way through, the microphone on the guitar amp got kicked around so it was facing the audience.) Or, you just might want a mix that provides the maximum amount of musical detail of a perfectly-recorded studio performance.

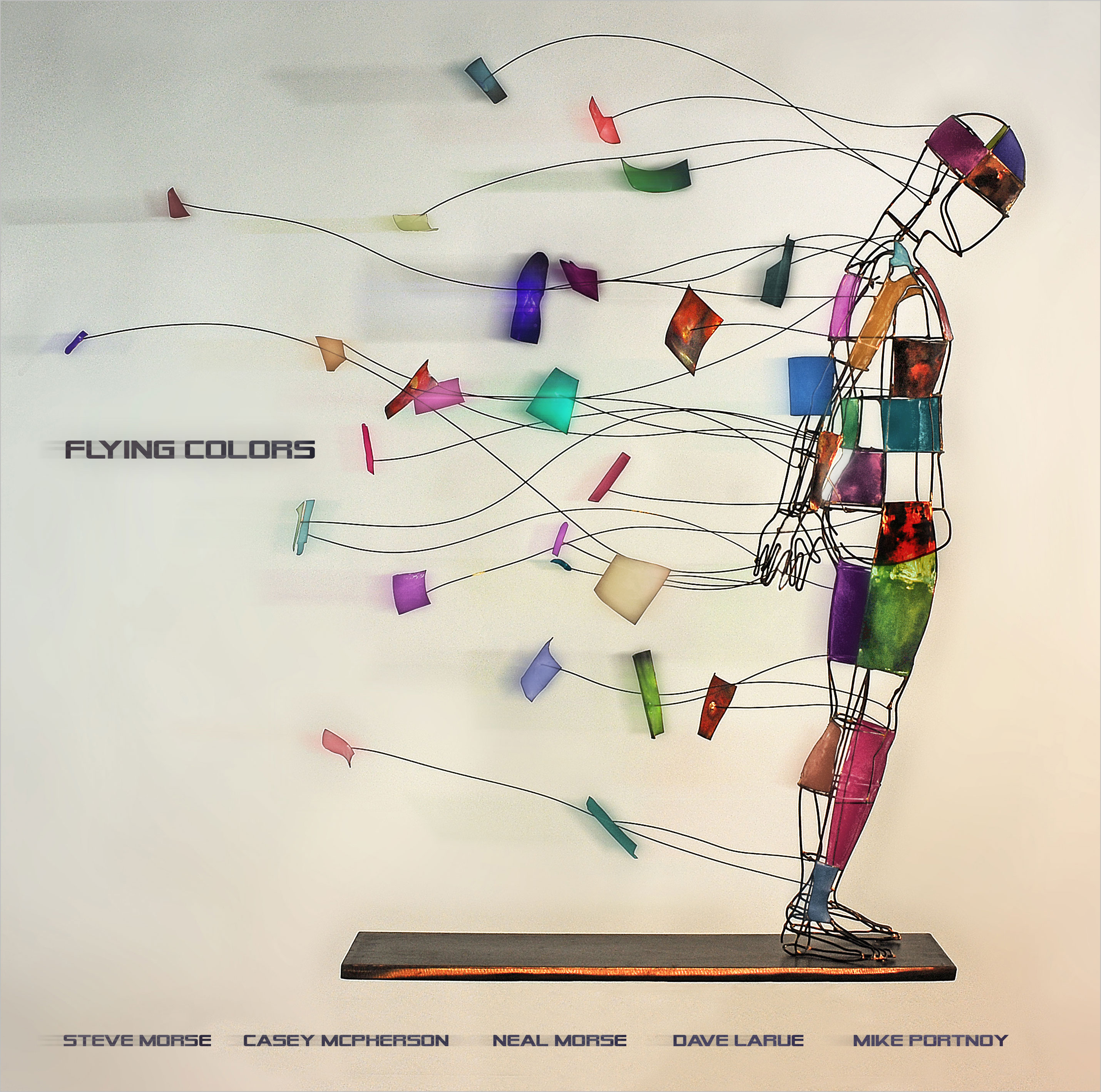

Since establishing these techniques in 2015, I’ve worked on recordings by artists such as Albert Lee, Mike Portnoy, Dave LaRue, Neal Morse, Steve Lukather, Sterling Ball, Steve Vai, Marco Minnemann, John Wesley, John Petrucci and Jay Graydon. The group I most commonly work with is Flying Colors, and (individually) their members (Steve Morse, Dave LaRue, Casey McPherson, Neal Morse, and Mike Portnoy).

There’s also a ferret named Malamo that I’ve been training to sort tiny screws. (So far, he just squeaks and steals my keys.)

Which projects have you enjoyed the most and which one was the most challenging one?

I began working with Kerry Livgren when I first graduated from college in 2001, before developing Harmonic Phrase Analysis & Restoration (HPAR). I started as his webmaster, and grew to work on a wide range of projects, such as management of his second post-Kansas band, Proto-Kaw. (Although I think the thing that most amused him was my repeated attempts to back into his driveway, the first time we met.) I’d put any project we’ve done up there as most enjoyable. Kerry is an extraordinary artist, teacher, and friend.

The most challenging project, so far, has been the album that debuted HPAR: Flying Colors’ Second Flight: Live at the Z7. This is a band of virtuoso musicians and incredibly expressive singers. My first and foremost goal was to present clarity that had never been experienced on a live recording. Fortunately, by then, I was also working with (assistant engineer) Tom Price, who was invaluable during the process. Mix engineer Rich Mouser was the key to bringing all the sounds to life. So it was really a team effort.

How do you deal with the problem that some mistakes by live musicians can be seen in the footage and the correction doesn’t match with what is visually played—for example, when the video material is so good that an important passage, including the mistake, shouldn’t be replaced?

That’s a good question! Typically, you cut away or go to a wide shot. A major reason is that the most common solution to performance errors is re-recording. It’s no secret that large sections of many (or most) commercial live releases are re-recorded. Sometimes this is simply done to improve clarity, irrespective of the performance. But when it comes to solos and singing, it’s never possible to replicate the original timing and articulation (which can also be seen).

One of the discoveries to emerge from my research was that cognitively, before a performer moves his hands, the brain builds a detailed motor program that specifies how the numerous muscles in the body will work together to perform an action (such as fretting a guitar note). One guitar note can be the result of hundreds of carefully timed muscle-movements, most of which the performer is not even aware of—and if even one of them goes wrong, then the result can be an audible mistake. But most of the body’s movements were still correct. When the performer re-records that section of music in the studio, he cannot replicate those exact movements—only try to match what he played. So, the camera needs to cut away once the re-recorded performance is pasted in over the video of the performer’s original movements on stage.

With performance restoration, we aim to restore the precise, intended result of the performer’s original motor program. Therefore, when the original footage is shown, it looks like the musician is creating the intended sounds, and his visual movements correspond to them.

Somewhere in the video image, the original motor program mistake may be visible. But remember that music occurs only in our minds, and a wide variety of cognitive processes, most of which we’re not aware of, work together to create the sensation we experience as music. Likewise, what we see is a product of visual processing in our brains—images are altered in our brain using heuristics, and much of what we “see” is actually a reflection of how we “feel” about what we see.

Studies have demonstrated that just by looking at a performer’s motions (resulting from motor programs), audiences can tell a tremendous amount about what a performer is trying to do. If the audio produced (after HPAR) matches the performer’s motions closely enough, our brains may remove the parts of the visual information that don’t match the audio, so we never notice that there’s a small muscle movement that doesn’t exactly correspond with what we hear. Everything matches up.

One could say that mistakes also reflect the soul (or the magic in the moment) of the performance. So, where do you draw the line regarding correction of live-recordings? Is it a musical, ethical, or philosophic line? How do you manage the balancing act between creating the proper mix of correcting imperfections and the authenticity of a performance?

That’s a good question! I don’t really try to fix mistakes. I don’t believe it’s possible, or generally desirable. The only way to fix a mistake is to go back in time, and so far iZotope hasn’t made a plugin for that.

We can reframe the question by looking at what happens when a performer makes a mistake. Specifically, let’s look at an improvised solo, because that’s where the most can go wrong. First, the performer will create a hierarchy of musical structures in his mind; you can think of the solo as a tree with expanding branches, and the leaves are the actual notes. The brain then sends this hierarchy further back in the mind to create a hierarchical motor control program. In the tree analogy, the leaves are the specific muscles to be moved. Much of this process is unconscious—it has to be—our conscious mind has a very limited capacity of about eight things to remember at the same time.

A mistake can occur in the building of either the musical or motor control programs, in their initial design, or the programs may be interrupted by the conscious mind during execution. (For example, if someone in a concert arena thinks their flash will help make for a better picture, but instead momentarily blinds the performer.)

What I aim to do in HPAR is circumvent time. I can’t change the past, but I can reveal what happened when performances occurred to inform the present and change the future. If I can recover parts of the original musical and motor programs, then I have parts of the puzzle which can restore the originally intended performance. I can predict how it would have sounded, and then work toward creating those sounds.

In my view, this is the most authentic way of dealing with performance errors, because at no point am I “fixing” what the performer did. Instead, I’m rolling back time to determine the performer’s original intention, and restoring that, whatever it may have been. If I’m successful, I haven’t fixed anything in the usual sense of the word.
